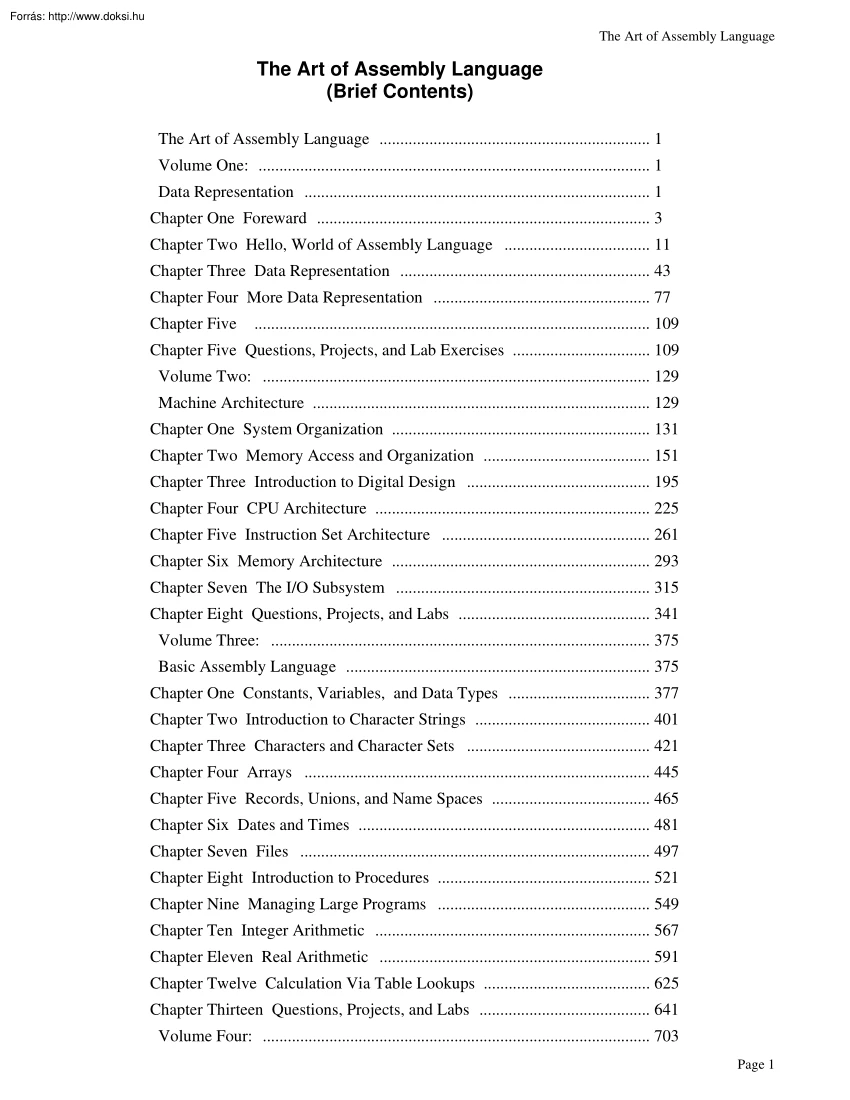

The Art of Assembly Language The Art of Assembly Language (Brief Contents) The Art of Assembly Language . 1 Volume One: . 1 Data Representation . 1 Chapter One Foreword . 3 Chapter Two Hello, World of Assembly Language . 11 Chapter Three Data Representation . 43 Chapter Four More Data Representation . 77 Chapter Five . 109 Chapter Five Questions, Projects, and Lab Exercises . 109 Volume Two: . 129 Machine Architecture . 129 Chapter One System Organization . 131 Chapter Two Memory Access and Organization . 151 Chapter Three Introduction to Digital Design . 195 Chapter Four CPU Architecture . 225 Chapter Five Instruction Set Architecture . 261 Chapter Six Memory Architecture . 293 Chapter Seven The I/O Subsystem . 315 Chapter Eight Questions, Projects, and Labs . 341 Volume Three: . 375 Basic Assembly Language . 375 Chapter One Constants, Variables, and Data Types . 377 Chapter Two Introduction to Character Strings . 401 Chapter Three Characters and Character Sets . 421 Chapter Four

Arrays . 445 Chapter Five Records, Unions, and Name Spaces . 465 Chapter Six Dates and Times . 481 Chapter Seven Files . 497 Chapter Eight Introduction to Procedures . 521 Chapter Nine Managing Large Programs . 549 Chapter Ten Integer Arithmetic . 567 Chapter Eleven Real Arithmetic . 591 Chapter Twelve Calculation Via Table Lookups . 625 Chapter Thirteen Questions, Projects, and Labs . 641 Volume Four: . 703 Intermediate Assembly Language . 703 Chapter One Advanced High Level Control Structures . 705 Chapter Two Low-Level Control Structures . 729 Chapter Three Intermediate Procedures . 781 Chapter Four Advanced Arithmetic . 827 Chapter Five Bit Manipulation . 881 Chapter Six The String Instructions . 907 Chapter Seven The HLA Compile-Time Language . 921 Chapter Eight Macros . 941 Chapter Nine Domain Specific Embedded Languages . 975 Chapter Ten Classes and Objects . 1029 Chapter Eleven The MMX Instruction Set . 1083 Chapter Twelve Mixed Language Programming . 1119 Chapter

Thirteen Questions, Projects, and Labs . 1163 Section Five . 1245 Section Five Advanced Assembly Language Programming . 1245 Chapter One Thunks . 1247 Chapter Two Iterators . 1271 Chapter Three Coroutines and Generators . 1293 Chapter Four Low-level Parameter Implementation . 1305 Chapter Five Lexical Nesting . 1337 Chapter Six Questions, Projects, and Labs . 1359 Appendix A Answers to Selected Exercises . 1365 Appendix B Console Graphic Characters . 1367 Appendix D The 80x86 Instruction Set . 1409 Appendix E The HLA Language Reference . 1437 Appendix F The HLA Standard Library Reference . 1439 Appendix G HLA Exceptions . 1441 Appendix H HLA Compile-Time Functions . 1447 Appendix I Installing HLA on Your System . 1477 Appendix J Debugging HLA Programs . 1501 Appendix K Comparing HLA and MASM . 1505 Appendix L HLA Code Generation for HLL Statements . 1507 Index . 1 The Art of Assembly Language (Full Contents) • Foreword to the HLA

Version of “The Art of Assembly.” 3 • Intended Audience . 6 • Teaching From This Text . 6 • Copyright Notice . 7 • How to Get a Hard Copy of This Text . 8 • Obtaining Program Source Listings and Other Materials in This Text . 8 • Where to Get Help . 8 • Other Materials You Will Need . 9 2.0 Chapter Overview 11 2.1 The Anatomy of an HLA Program 11 2.2 Some Basic HLA Data Declarations 12 2.3 Boolean Values 14 2.4 Character Values 15 2.5 An Introduction to the Intel 80x86 CPU Family 15 2.6 Some Basic Machine Instructions 18 2.7 Some Basic HLA Control Structures 21 2.7.1 Boolean Expressions in HLA Statements 21 2.7.2 The HLA IF..THEN..ELSEIF..ELSE..ENDIF Statement 23 2.7.3 The WHILE..ENDWHILE Statement 24 2.7.4 The FOR..ENDFOR Statement 25 2.7.5 The REPEAT..UNTIL Statement 26 2.7.6 The BREAK and BREAKIF Statements 27 2.7.7 The FOREVER..ENDFOR Statement 27 2.7.8 The TRY..EXCEPTION..ENDTRY Statement 28 2.8 Introduction to the HLA Standard Library 29 2.8.1 Predefined Constants in the

STDIO Module 30 2.8.2 Standard In and Standard Out 31 2.8.3 The stdout.newln Routine 31 2.8.4 The stdout.putiX Routines 31 2.8.5 The stdout.putiXSize Routines 32 2.8.6 The stdout.put Routine 33 2.8.7 The stdin.getc Routine 34 2.8.8 The stdin.getiX Routines 35 2.8.9 The stdin.readLn and stdin.flushInput Routines 36 2.8.10 The stdin.get Macro 37 2.9 Putting It All Together 38 2.10 Sample Programs 38 2.10.1 Powers of Two Table Generation 38 2.10.2 Checkerboard Program 39 Beta Draft - Do not distribute 2001, By Randall Hyde Page 1 AoATOC.fm 2.10.3 Fibonacci Number Generation 41 3.1 Chapter Overview 43 3.2 Numbering Systems 43 3.2.1 A Review of the Decimal System 43 3.2.2 The Binary Numbering System 44 3.2.3 Binary Formats 45 3.3 Data Organization 46 3.3.1 Bits 46 3.3.2 Nibbles 46 3.3.3 Bytes 47 3.3.4 Words 48 3.3.5 Double Words 49 3.4 The Hexadecimal Numbering System 50 3.5 Arithmetic Operations on Binary and Hexadecimal Numbers 52 3.6 A Note About Numbers vs Representation 53 3.7

Logical Operations on Bits 55 3.8 Logical Operations on Binary Numbers and Bit Strings 57 3.9 Signed and Unsigned Numbers 59 3.10 Sign Extension, Zero Extension, Contraction, and Saturation 63 3.11 Shifts and Rotates 66 3.12 Bit Fields and Packed Data 71 3.13 Putting It All Together 74 4.1 Chapter Overview 77 4.2 An Introduction to Floating Point Arithmetic 77 4.2.1 IEEE Floating Point Formats 80 4.2.2 HLA Support for Floating Point Values 83 4.3 Binary Coded Decimal (BCD) Representation 85 4.4 Characters 86 4.4.1 The ASCII Character Encoding 87 4.4.2 HLA Support for ASCII Characters 90 4.4.3 The ASCII Character Set 93 4.5 The UNICODE Character Set 98 4.6 Other Data Representations 98 4.6.1 Representing Colors on a Video Display 98 4.6.2 Representing Audio Information 100 4.6.3 Representing Musical Information 104 4.6.4 Representing Video Information 105 4.6.5 Where to Get More Information About Data Types 105 4.7 Putting It All Together 106 5.1 Questions 109 5.2

Programming Projects for Chapter Two 114 5.5 Laboratory Exercises for Chapter Two 117 5.5.1 A Short Note on Laboratory Exercises and Lab Reports 117 5.5.2 Installing the HLA Distribution Package 117 5.5.3 What’s Included in the HLA Distribution Package 119 5.5.4 Using the HLA Compiler 121 5.5.5 Compiling Your First Program 121 5.5.6 Compiling Other Programs Appearing in this Chapter 123 5.5.7 Creating and Modifying HLA Programs 123 5.5.8 Writing a New Program 124 5.5.9 Correcting Errors in an HLA Program 125 5.5.10 Write Your Own Sample Program 125 5.6 Laboratory Exercises for Chapter Three and Chapter Four 126 5.6.1 Data Conversion Exercises 126 5.6.2 Logical Operations Exercises 127 5.6.3 Sign and Zero Extension Exercises 127 5.6.4 Packed Data Exercises 128 5.6.5 Running this Chapter’s Sample Programs 128 5.6.6 Write Your Own Sample Program 128 1.1 Chapter Overview 131 1.2

The Basic System Components 131 1.2.1 The System Bus 132 1.2.1.1 The Data Bus 132 1.2.1.2 The Address Bus 133 1.2.1.3 The Control Bus 134 1.2.2 The Memory Subsystem 135 1.2.3 The I/O Subsystem 141 1.3 HLA Support for Data Alignment 141 1.4 System Timing 144 1.4.1 The System Clock 144 1.4.2 Memory Access and the System Clock 145 1.4.3 Wait States 146 1.4.4 Cache Memory 147 1.5 Putting It All Together 150 2.1 Chapter Overview 151 2.2 The 80x86 Addressing Modes 151 2.2.1 80x86 Register Addressing Modes 151 2.2.2 80x86 32-bit Memory Addressing Modes 152 2.2.2.1 The Displacement Only Addressing Mode 152 2.2.2.2 The Register Indirect Addressing Modes 153 2.2.2.3 Indexed Addressing Modes 154 2.2.2.4 Variations on the Indexed Addressing Mode 155 2.2.2.5 Scaled Indexed Addressing Modes 157 2.2.2.6 Addressing Mode Wrap-up 158 2.3 Run-Time Memory Organization 158 2.3.1 The Code Section 159 2.3.2 The Read-Only Data Section 160 2.3.3 The Storage Section 161 2.3.4 The Static Sections 161 2.3.5 The

NOSTORAGE Attribute 162 2.3.6 The Var Section 162 2.3.7 Organization of Declaration Sections Within Your Programs 163 2.4 Address Expressions 164 2.5 Type Coercion 166 2.6 Register Type Coercion 168 2.7 The Stack Segment and the Push and Pop Instructions 169 2.7.1 The Basic PUSH Instruction 169 2.7.2 The Basic POP Instruction 170 2.7.3 Preserving Registers With the PUSH and POP Instructions 172 2.7.4 The Stack is a LIFO Data Structure 172 2.7.5 Other PUSH and POP Instructions 175 2.7.6 Removing Data From the Stack Without Popping It 176 2.7.7 Accessing Data You’ve Pushed on the Stack Without Popping It 178 2.8 Dynamic Memory Allocation and the Heap Segment 180 2.9 The INC and DEC Instructions 183 2.10 Obtaining the Address of a Memory Object 183 2.11 Bonus Section: The HLA Standard Library CONSOLE Module 184 2.11.1 Clearing the Screen 184 2.11.2 Positioning the Cursor 185 2.11.3 Locating the Cursor

186 2.11.4 Text Attributes 188 2.11.5 Filling a Rectangular Section of the Screen 190 2.11.6 Console Direct String Output 191 2.11.7 Other Console Module Routines 193 2.12 Putting It All Together 193 3.1 Chapter Overview 195 3.2 Boolean Algebra 195 3.3 Boolean Functions and Truth Tables 197 3.4 Algebraic Manipulation of Boolean Expressions 200 3.5 Canonical Forms 201 3.6 Simplification of Boolean Functions 206 3.7 What Does This Have To Do With Computers, Anyway? 213 3.7.1 Correspondence Between Electronic Circuits and Boolean Functions 213 3.7.2 Combinatorial Circuits 215 3.7.3 Sequential and Clocked Logic 220 3.8 Okay, What Does It Have To Do With Programming, Then? 223 3.9 Putting It All Together 224 4.1 Chapter Overview 225 4.2 The History of the 80x86 CPU Family 225 4.3 A History of Software Development for the x86 231 4.4 Basic CPU Design 235 4.5 Decoding and Executing Instructions: Random Logic Versus Microcode 237 4.6 RISC vs CISC vs VLIW 238 4.7 Instruction

Execution, Step-By-Step 240 4.8 Parallelism – the Key to Faster Processors 242 4.8.1 The Prefetch Queue – Using Unused Bus Cycles 245 4.8.2 Pipelining – Overlapping the Execution of Multiple Instructions 249 4.8.2.1 A Typical Pipeline 249 4.8.2.2 Stalls in a Pipeline 251 4.8.3 Instruction Caches – Providing Multiple Paths to Memory 252 4.8.4 Hazards 254 4.8.5 Superscalar Operation – Executing Instructions in Parallel 255 4.8.6 Out of Order Execution 257 4.8.7 Register Renaming 257 4.8.8 Very Long Instruction Word Architecture (VLIW) 258 4.8.9 Parallel Processing 258 4.8.10 Multiprocessing 259 4.9 Putting It All Together 260 5.1 Chapter Overview 261 5.2 The Importance of the Design of the Instruction Set 261 5.3 Basic Instruction Design Goals 262 5.4 The Y86 Hypothetical Processor 267 5.4.1 Addressing Modes on the Y86 269 5.4.2 Encoding Y86 Instructions 270 5.4.3 Hand

Encoding Instructions 272 5.4.4 Using an Assembler to Encode Instructions 275 5.4.5 Extending the Y86 Instruction Set 276 5.5 Encoding 80x86 Instructions 277 5.5.1 Encoding Instruction Operands 279 5.5.2 Encoding the ADD Instruction: Some Examples 284 5.5.3 Encoding Immediate Operands 289 5.5.4 Encoding Eight, Sixteen, and Thirty-Two Bit Operands 290 5.5.5 Alternate Encodings for Instructions 290 5.6 Putting It All Together 290 6.1 Chapter Overview 293 6.2 The Memory Hierarchy 293 6.3 How the Memory Hierarchy Operates 295 6.4 Relative Performance of Memory Subsystems 296 6.5 Cache Architecture 297 6.6 Virtual Memory, Protection, and Paging 302 6.7 Thrashing 304 6.8 NUMA and Peripheral Devices 305 6.9 Segmentation 305 6.10 Segments and HLA 306 6.11 User Defined Segments in HLA 309 6.12 Controlling the Placement and Attributes of Segments in Memory 310 6.13 Putting it All Together 314 7.1 Chapter Overview 315 7.2 Connecting a CPU to the Outside World 315

7.3 Read-Only, Write-Only, Read/Write, and Dual I/O Ports 316 7.4 I/O (Input/Output) Mechanisms 318 7.4.1 Memory Mapped Input/Output 318 7.4.2 I/O Mapped Input/Output 319 7.4.3 Direct Memory Access 320 7.5 I/O Speed Hierarchy 320 7.6 System Busses and Data Transfer Rates 321 7.7 The AGP Bus 323 7.8 Buffering 323 7.9 Handshaking 324 7.10 Time-outs on an I/O Port 326 7.11 Interrupts and Polled I/O 327 7.12 Using a Circular Queue to Buffer Input Data from an ISR 329 7.13 Using a Circular Queue to Buffer Output Data for an ISR 334 7.14 I/O and the Cache 336 7.15 Windows and Protected Mode Operation 337 7.16 Device Drivers 338 7.17 Putting It All Together 338 8.1 Questions 341 8.2 Programming Projects 347 8.3 Chapters One and Two Laboratory Exercises 349 8.3.1 Memory Organization Exercises 349 8.3.2 Data Alignment Exercises 350 8.3.3 Readonly Segment Exercises 353 8.3.4 Type Coercion Exercises 353 8.3.5

Dynamic Memory Allocation Exercises 354 8.4 Chapter Three Laboratory Exercises 355 8.4.1 Truth Tables and Logic Equations Exercises 356 8.4.2 Canonical Logic Equations Exercises 357 8.4.3 Optimization Exercises 358 8.4.4 Logic Evaluation Exercises 358 8.5 Laboratory Exercises for Chapters Four, Five, Six, and Seven 363 8.5.1 The SIMY86 Program – Some Simple Y86 Programs 363 8.5.2 Simple I/O-Mapped Input/Output Operations 366 8.5.3 Memory Mapped I/O 367 8.5.4 DMA Exercises 368 8.5.5 Interrupt Driven I/O Exercises 369 8.5.6 Machine Language Programming & Instruction Encoding Exercises 369 8.5.7 Self Modifying Code Exercises 371 8.5.8 Virtual Memory Exercise 373 1.1 Chapter Overview 377 1.2 Some Additional Instructions: INTMUL, BOUND, INTO 377 1.3 The QWORD and TBYTE Data Types 381 1.4 HLA Constant and Value Declarations 381 1.4.1 Constant Types 384 1.4.2 String and Character

Literal Constants 385 1.4.3 String and Text Constants in the CONST Section 386 1.4.4 Constant Expressions 387 1.4.5 Multiple CONST Sections and Their Order in an HLA Program 389 1.4.6 The HLA VAL Section 389 1.4.7 Modifying VAL Objects at Arbitrary Points in Your Programs 390 1.5 The HLA TYPE Section 391 1.6 ENUM and HLA Enumerated Data Types 392 1.7 Pointer Data Types 393 1.7.1 Using Pointers in Assembly Language 394 1.7.2 Declaring Pointers in HLA 395 1.7.3 Pointer Constants and Pointer Constant Expressions 395 1.7.4 Pointer Variables and Dynamic Memory Allocation 396 1.7.5 Common Pointer Problems 397 1.8 Putting It All Together 400 2.1 Chapter Overview 401 2.2 Composite Data Types 401 2.3 Character Strings 401 2.4 HLA Strings 403 2.5 Accessing the Characters Within a String 407 2.6 The HLA String Module and Other String-Related Routines 409 2.7 In-Memory Conversions 419 2.8 Putting It All Together 420 3.1 Chapter Overview 421 3.2 The HLA Standard Library CHARS.HHF

Module 421 3.3 Character Sets 423 3.4 Character Set Implementation in HLA 424 3.5 HLA Character Set Constants and Character Set Expressions 425 3.6 The IN Operator in HLA HLL Boolean Expressions 426 3.7 Character Set Support in the HLA Standard Library 427 3.8 Using Character Sets in Your HLA Programs 429 3.9 Low-level Implementation of Set Operations 431 3.9.1 Character Set Functions That Build Sets 431 3.9.2 Traditional Set Operations 437 3.9.3 Testing Character Sets 440 3.10 Putting It All Together 443 4.1 Chapter Overview 445 4.2 Arrays 445 4.3 Declaring Arrays in Your HLA Programs 446 4.4 HLA Array Constants 446 4.5 Accessing Elements of a Single Dimension Array 447 4.5.1 Sorting an Array of Values 449 4.6 Multidimensional Arrays 450 4.6.1 Row Major Ordering 451 4.6.2 Column Major Ordering 454 4.7 Allocating Storage for Multidimensional Arrays 455 4.8 Accessing Multidimensional Array

Elements in Assembly Language 457 4.9 Large Arrays and MASM 458 4.10 Dynamic Arrays in Assembly Language 458 4.11 HLA Standard Library Array Support 460 4.12 Putting It All Together 462 5.1 Chapter Overview 465 5.2 Records 465 5.3 Record Constants 467 5.4 Arrays of Records 468 5.5 Arrays/Records as Record Fields 468 5.6 Controlling Field Offsets Within a Record 471 5.7 Aligning Fields Within a Record 472 5.8 Pointers to Records 473 5.9 Unions 474 5.10 Anonymous Unions 476 5.11 Variant Types 477 5.12 Namespaces 477 5.13 Putting It All Together 480 6.1 Chapter Overview 481 6.2 Dates 481 6.3 A Brief History of the Calendar 482 6.4 HLA Date Functions 485 6.4.1 date.IsValid and date.validate 485 6.4.2 Checking for Leap Years 486 6.4.3 Obtaining the System Date 489 6.4.4 Date to String Conversions and Date Output 489 6.4.5 date.unpack and date.pack 491 6.4.6 date.Julian, date.fromJulian 492 6.4.7 date.datePlusDays, date.datePlusMonths, and date.daysBetween 492 6.4.8

date.dayNumber, date.daysLeft, and date.dayOfWeek 493 6.5 Times 493 6.5.1 time.curTime 494 6.5.2 time.hmsToSecs and time.secstoHMS 494 6.5.3 Time Input/Output 495 6.6 Putting It All Together 496 7.1 Chapter Overview 497 7.2 File Organization 497 7.2.1 Files as Lists of Records 497 7.2.2 Binary vs Text Files 498 7.3 Sequential Files 500 7.4 Random Access Files 506 7.5 ISAM (Indexed Sequential Access Method) Files 510 7.6 Truncating a File 512 7.7 File Utility Routines 514 7.7.1 Copying, Moving, and Renaming Files 514 7.7.2 Computing the File Size 516 7.7.3 Deleting Files 517 7.8 Directory Operations 518 7.9 Putting It All Together 518 8.1 Chapter Overview 521 8.2 Procedures 521 8.3 Saving the State of the Machine 523 8.4 Prematurely Returning from a Procedure 526 8.5 Local Variables 527 8.6 Other Local and Global Symbol Types 531 8.7 Parameters 532 8.7.1 Pass by Value 532

8.7.2 Pass by Reference 535 8.8 Functions and Function Results 537 8.8.1 Returning Function Results 537 8.8.2 Instruction Composition in HLA 538 8.8.3 The HLA RETURNS Option in Procedures 540 8.9 Side Effects 542 8.10 Recursion 543 8.11 Forward Procedures 546 8.12 Putting It All Together 547 9.1 Chapter Overview 549 9.2 Managing Large Programs 549 9.3 The #INCLUDE Directive 549 9.4 Ignoring Duplicate Include Operations 551 9.5 UNITs and the EXTERNAL Directive 551 9.5.1 Behavior of the EXTERNAL Directive 555 9.5.2 Header Files in HLA 556 9.6 Make Files 557 9.7 Code Reuse 560 9.8 Creating and Managing Libraries 561 9.9 Name Space Pollution 563 9.10 Putting It All Together 564 10.1 Chapter Overview 567 10.2 80x86 Integer Arithmetic Instructions 567 10.2.1 The MUL and IMUL Instructions 567 10.2.2 The DIV and IDIV Instructions 569 10.2.3 The CMP Instruction 572 10.2.4 The SETcc Instructions 573

10.2.5 The TEST Instruction 576 10.3 Arithmetic Expressions 577 10.3.1 Simple Assignments 577 10.3.2 Simple Expressions 578 10.3.3 Complex Expressions 579 10.3.4 Commutative Operators 583 10.4 Logical (Boolean) Expressions 584 10.5 Machine and Arithmetic Idioms 586 10.5.1 Multiplying without MUL, IMUL, or INTMUL 586 10.5.2 Division Without DIV or IDIV 587 10.5.3 Implementing Modulo-N Counters with AND 587 10.5.4 Careless Use of Machine Idioms 588 10.6 The HLA (Pseudo) Random Number Unit 588 10.7 Putting It All Together 590 11.1 Chapter Overview 591 11.2 Floating Point Arithmetic 591 11.2.1 FPU Registers 591 11.2.1.1 FPU Data Registers 592 11.2.1.2 The FPU Control Register 592 11.2.1.3 The FPU Status Register 595 11.2.2 FPU Data Types 598 11.2.3 The FPU Instruction Set 599 11.2.4 FPU Data Movement Instructions 599 11.2.4.1 The FLD Instruction 599 11.2.4.2 The FST and FSTP Instructions 600 11.2.4.3 The FXCH Instruction 601 11.2.5 Conversions 601 11.2.5.1 The FILD Instruction 601 11.2.5.2

The FIST and FISTP Instructions 602 11.2.5.3 The FBLD and FBSTP Instructions 602 11.2.6 Arithmetic Instructions 603 11.2.6.1 The FADD and FADDP Instructions 603 11.2.6.2 The FSUB, FSUBP, FSUBR, and FSUBRP Instructions 603 11.2.6.3 The FMUL and FMULP Instructions 604 11.2.6.4 The FDIV, FDIVP, FDIVR, and FDIVRP Instructions 605 11.2.6.5 The FSQRT Instruction 605 11.2.6.6 The FPREM and FPREM1 Instructions 606 11.2.6.7 The FRNDINT Instruction 606 11.2.6.8 The FABS Instruction 607 11.2.6.9 The FCHS Instruction 607 11.2.7 Comparison Instructions 607 11.2.7.1 The FCOM, FCOMP, and FCOMPP Instructions 608 11.2.7.2 The FTST Instruction 609 11.2.8 Constant Instructions 609 11.2.9 Transcendental Instructions 609 11.2.9.1 The F2XM1 Instruction 609 11.2.9.2 The FSIN, FCOS, and FSINCOS Instructions 610 11.2.9.3 The FPTAN Instruction 610 11.2.9.4 The FPATAN Instruction 610 11.2.9.5 The FYL2X Instruction 610 11.2.9.6

The FYL2XP1 Instruction 610 11.2.10 Miscellaneous instructions 611 11.2.10.1 The FINIT and FNINIT Instructions 611 11.2.10.2 The FLDCW and FSTCW Instructions 611 11.2.10.3 The FCLEX and FNCLEX Instructions 611 11.2.10.4 The FSTSW and FNSTSW Instructions 612 11.2.11 Integer Operations 612 11.3 Converting Floating Point Expressions to Assembly Language 612 11.3.1 Converting Arithmetic Expressions to Postfix Notation 613 11.3.2 Converting Postfix Notation to Assembly Language 615 11.3.3 Mixed Integer and Floating Point Arithmetic 616 11.4 HLA Standard Library Support for Floating Point Arithmetic 617 11.4.1 The stdin.getf and fileio.getf Functions 617 11.4.2 Trigonometric Functions in the HLA Math Library 617 11.4.3 Exponential and Logarithmic Functions in the HLA Math Library 618 11.5 Sample Program 619 11.6 Putting It All Together 624 12.1 Chapter Overview 625 12.2 Tables 625 12.2.1 Function Computation via Table Look-up 625 12.2.2 Domain Conditioning 628 12.2.3 Generating Tables 629

12.3 High Performance Implementation of cs.rangeChar 632 13.1 Questions 641 13.2 Programming Projects 648 13.3 Laboratory Exercises 655 13.3.1 Using the BOUND Instruction to Check Array Indices 655 13.3.2 Using TEXT Constants in Your Programs 658 13.3.3 Constant Expressions Lab Exercise 660 13.3.4 Pointers and Pointer Constants Exercises 662 13.3.5 String Exercises 663 13.3.6 String and Character Set Exercises 665 13.3.7 Console Array Exercise 669 13.3.8 Multidimensional Array Exercises 671 13.3.9 Console Attributes Laboratory Exercise 674 13.3.10 Records, Arrays, and Pointers Laboratory Exercise 676 13.3.11 Separate Compilation Exercises 682 13.3.12 The HLA (Pseudo) Random Number Unit 688 13.3.13 File I/O in HLA 689 13.3.14 Timing Various Arithmetic Instructions 690 13.3.15 Using the RDTSC Instruction to Time a Code Sequence 693 13.3.16 Timing Floating Point Instructions 697 13.3.17 Table Lookup Exercise 700 1.1 Chapter Overview 705

1.2 Conjunction, Disjunction, and Negation in Boolean Expressions 705 1.3 TRY..ENDTRY 707 1.3.1 Nesting TRY..ENDTRY Statements 708 1.3.2 The UNPROTECTED Clause in a TRY..ENDTRY Statement 710 1.3.3 The ANYEXCEPTION Clause in a TRY..ENDTRY Statement 713 1.3.4 Raising User-Defined Exceptions 713 1.3.5 Reraising Exceptions in a TRY..ENDTRY Statement 715 1.3.6 A List of the Predefined HLA Exceptions 715 1.3.7 How to Handle Exceptions in Your Programs 715 1.3.8 Registers and the TRY..ENDTRY Statement 717 1.4 BEGIN..EXIT..EXITIF..END 718 1.5 CONTINUE..CONTINUEIF 723 1.6 SWITCH..CASE..DEFAULT..ENDSWITCH 725 1.7 Putting It All Together 727 2.1 Chapter Overview 729 2.2 Low Level Control Structures 729 2.3 Statement Labels 729 2.4 Unconditional Transfer of Control (JMP) 731 2.5 The Conditional Jump Instructions 733 2.6 “Medium-Level” Control Structures: JT and JF 736 2.7 Implementing Common Control Structures in Assembly Language 736 2.8 Introduction to Decisions 736

2.8.1 IF..THEN..ELSE Sequences 738 2.8.2 Translating HLA IF Statements into Pure Assembly Language 741 2.8.3 Implementing Complex IF Statements Using Complete Boolean Evaluation 745 2.8.4 Short Circuit Boolean Evaluation 746 2.8.5 Short Circuit vs Complete Boolean Evaluation 747 2.8.6 Efficient Implementation of IF Statements in Assembly Language 749 2.8.7 SWITCH/CASE Statements 752 2.9 State Machines and Indirect Jumps 761 2.10 Spaghetti Code 763 2.11 Loops 763 2.11.1 While Loops 764 2.11.2 Repeat..Until Loops 765 2.11.3 FOREVER..ENDFOR Loops 766 2.11.4 FOR Loops 766 2.11.5 The BREAK and CONTINUE Statements 767 2.11.6 Register Usage and Loops 771 2.12 Performance Improvements 772 2.12.1 Moving the Termination Condition to the End of a Loop 772 2.12.2 Executing the Loop Backwards 774 2.12.3 Loop Invariant Computations 775 2.12.4 Unraveling Loops 776 2.12.5 Induction Variables 777 2.13 Hybrid Control Structures in HLA 778

2.14 Putting It All Together 780 3.1 Chapter Overview 781 3.2 Procedures and the CALL Instruction 781 3.3 Procedures and the Stack 783 3.4 Activation Records 786 3.5 The Standard Entry Sequence 789 3.6 The Standard Exit Sequence 790 3.7 HLA Local Variables 791 3.8 Parameters 792 3.8.1 Pass by Value 793 3.8.2 Pass by Reference 793 3.8.3 Passing Parameters in Registers 794 3.8.4 Passing Parameters in the Code Stream 796 3.8.5 Passing Parameters on the Stack 798 3.8.5.1 Accessing Value Parameters on the Stack 800 3.8.5.2 Passing Value Parameters on the Stack 801 3.8.5.3 Accessing Reference Parameters on the Stack 806 3.8.5.4 Passing Reference Parameters on the Stack 808 3.8.5.5 Passing Formal Parameters as Actual Parameters 811 3.8.5.6 HLA Hybrid Parameter Passing Facilities 812 3.8.5.7 Mixing Register and Stack Based Parameters 814 3.9 Procedure Pointers 814 3.10 Procedural Parameters 816 3.11 Untyped Reference Parameters 817 3.12 Iterators and

the FOREACH Loop 818 3.13 Sample Programs 820 3.13.1 Generating the Fibonacci Sequence Using an Iterator 820 3.13.2 Outer Product Computation with Procedural Parameters 822 3.14 Putting It All Together 825 4.1 Chapter Overview 827 4.2 Multiprecision Operations 827 4.2.1 Multiprecision Addition Operations 827 4.2.2 Multiprecision Subtraction Operations 830 4.2.3 Extended Precision Comparisons 831 4.2.4 Extended Precision Multiplication 834 4.2.5 Extended Precision Division 838 4.2.6 Extended Precision NEG Operations 846 4.2.7 Extended Precision AND Operations 847 4.2.8 Extended Precision OR Operations 848 4.2.9 Extended Precision XOR Operations 848 4.2.10 Extended Precision NOT Operations 848 4.2.11 Extended Precision Shift Operations 848 4.2.12 Extended Precision Rotate Operations 852 4.2.13 Extended Precision I/O 852 4.2.13.1 Extended Precision Hexadecimal Output 853 4.2.13.2 Extended Precision Unsigned Decimal Output 853

4.2.13.3 Extended Precision Signed Decimal Output 856 4.2.13.4 Extended Precision Formatted I/O 857 4.2.13.5 Extended Precision Input Routines 858 4.2.13.6 Extended Precision Hexadecimal Input 861 4.2.13.7 Extended Precision Unsigned Decimal Input 865 4.2.13.8 Extended Precision Signed Decimal Input 869 4.3 Operating on Different Sized Operands 869 4.4 Decimal Arithmetic 870 4.4.1 Literal BCD Constants 872 4.4.2 The 80x86 DAA and DAS Instructions 872 4.4.3 The 80x86 AAA, AAS, AAM, and AAD Instructions 873 4.4.4 Packed Decimal Arithmetic Using the FPU 874 4.5 Sample Program 876 4.6 Putting It All Together 880 5.1 Chapter Overview 881 5.2 What is Bit Data, Anyway? 881 5.3 Instructions That Manipulate Bits 882 5.4 The Carry Flag as a Bit Accumulator 888 5.5 Packing and Unpacking Bit Strings 889 5.6 Coalescing Bit Sets and Distributing Bit Strings 892 5.7 Packed Arrays of Bit Strings 893 5.8 Searching for a Bit 895 5.9 Counting Bits 897 5.10 Reversing a Bit

String 899 5.11 Merging Bit Strings 901 5.12 Extracting Bit Strings 901 5.13 Searching for a Bit Pattern 903 5.14 The HLA Standard Library Bits Module 904 5.15 Putting It All Together 905 6.1 Chapter Overview 907 6.2 The 80x86 String Instructions 907 6.2.1 How the String Instructions Operate 908 6.2.2 The REP/REPE/REPZ and REPNZ/REPNE Prefixes 908 6.2.3 The Direction Flag 909 6.2.4 The MOVS Instruction 910 6.2.5 The CMPS Instruction 915 6.2.6 The SCAS Instruction 918 6.2.7 The STOS Instruction 918 6.2.8 The LODS Instruction 919 6.2.9 Building Complex String Functions from LODS and STOS 919 6.3 Putting It All Together 920 6.1 Chapter Overview 921 6.2 Introduction to the Compile-Time Language (CTL) 921 6.3 The #PRINT and #ERROR Statements 922 6.4 Compile-Time Constants and Variables 924 6.5 Compile-Time Expressions and Operators 924 6.6 Compile-Time Functions 927 6.6.1

Type Conversion Compile-time Functions 928 6.6.2 Numeric Compile-Time Functions 928 6.6.3 Character Classification Compile-Time Functions 929 6.6.4 Compile-Time String Functions 929 6.6.5 Compile-Time Pattern Matching Functions 929 6.6.6 Compile-Time Symbol Information 930 6.6.7 Compile-Time Expression Classification Functions 931 6.6.8 Miscellaneous Compile-Time Functions 932 6.6.9 Predefined Compile-Time Variables 932 6.6.10 Compile-Time Type Conversions of TEXT Objects 933 6.7 Conditional Compilation (Compile-Time Decisions) 934 6.8 Repetitive Compilation (Compile-Time Loops) 937 6.9 Putting It All Together 939 7.1 Chapter Overview 941 7.2 Macros (Compile-Time Procedures) 941 7.2.1 Standard Macros 941 7.2.2 Macro Parameters 943 7.2.2.1 Standard Macro Parameter Expansion 943 7.2.2.2 Macros with a Variable Number of Parameters 946 7.2.2.3 Required Versus Optional Macro Parameters 947 7.2.2.4 The "#(" and ")#" Macro Parameter Brackets 948 7.2.2.5 Eager vs Deferred

Macro Parameter Evaluation 949 7.2.3 Local Symbols in a Macro 952 7.2.4 Macros as Compile-Time Procedures 957 7.2.5 Multi-part (Context-Free) Macros 957 7.2.6 Simulating Function Overloading with Macros 962 7.3 Writing Compile-Time "Programs" 967 7.3.1 Constructing Data Tables at Compile Time 968 7.3.2 Unrolling Loops 971 7.4 Using Macros in Different Source Files 973 7.5 Putting It All Together 973 9.1 Chapter Overview 975 9.2 Introduction to DSELs in HLA 975 9.2.1 Implementing the Standard HLA Control Structures 975 9.2.1.1 The FOREVER Loop 976 9.2.1.2 The WHILE Loop 979 9.2.1.3 The IF Statement 981 9.2.2 The HLA SWITCH/CASE Statement 987 9.2.3 A Modified WHILE Loop 998 9.2.4 A Modified IF..ELSE..ENDIF Statement 1002 9.3 Sample Program: A Simple Expression Compiler 1007 9.4 Putting It All Together 1028 10.1 Chapter Overview 1029 10.2 General Principles 1029 10.3 Classes in HLA 1031 10.4

Objects 1033 10.5 Inheritance 1034 10.6 Overriding 1035 10.7 Virtual Methods vs Static Procedures 1036 10.8 Writing Class Methods, Iterators, and Procedures 1037 10.9 Object Implementation 1040 10.9.1 Virtual Method Tables 1043 10.9.2 Object Representation with Inheritance 1045 10.10 Constructors and Object Initialization 1048 10.10.1 Dynamic Object Allocation Within the Constructor 1049 10.10.2 Constructors and Inheritance 1051 10.10.3 Constructor Parameters and Procedure Overloading 1054 10.11 Destructors 1055 10.12 HLA’s “_initialize_” and “_finalize_” Strings 1055 10.13 Abstract Methods 1060 10.14 Run-time Type Information (RTTI) 1062 10.15 Calling Base Class Methods 1064 10.16 Sample Program 1064 10.17 Putting It All Together 1081 11.1 Chapter Overview 1083 11.2 Determining if a CPU Supports the MMX Instruction Set 1083 11.3 The MMX Programming Environment 1084 11.3.1 The MMX Registers 1084 11.3.2 The MMX Data Types 1086 11.4 The Purpose of the MMX

Instruction Set 1087 11.5 Saturation Arithmetic and Wraparound Mode 1087 11.6 MMX Instruction Operands 1088 11.7 MMX Technology Instructions 1092 11.7.1 MMX Data Transfer Instructions 1093 11.7.2 MMX Conversion Instructions 1093 11.7.3 MMX Packed Arithmetic Instructions 1100 11.7.4 MMX Logic Instructions 1102 11.7.5 MMX Comparison Instructions 1103 11.7.6 MMX Shift Instructions 1107 11.8 The EMMS Instruction 1108 11.9 The MMX Programming Paradigm 1109 11.10 Putting It All Together 1117 12.1 Chapter Overview 1119 12.2 Mixing HLA and MASM Code in the Same Program 1119 12.2.1 In-Line (MASM) Assembly Code in Your HLA Programs 1119 12.2.2 Linking MASM-Assembled Modules with HLA Modules 1122 12.3 Programming in Delphi and HLA 1125 12.3.1 Linking HLA Modules With Delphi Programs 1126 12.3.2 Register Preservation 1128 12.3.3 Function Results 1129 12.3.4 Calling Conventions 1135

12.35 Pass by Value, Reference, CONST, and OUT in Delphi 1139 12.36 Scalar Data Type Correspondence Between Delphi and HLA 1140 12.37 Passing String Data Between Delphi and HLA Code 1142 12.38 Passing Record Data Between HLA and Delphi 1144 12.39 Passing Set Data Between Delphi and HLA 1148 12.310 Passing Array Data Between HLA and Delphi 1148 12.311 Delphi Limitations When Linking with (Non-TASM) Assembly Code 1148 12.312 Referencing Delphi Objects from HLA Code 1149 12.4 Programming in C/C++ and HLA 1151 12.41 Linking HLA Modules With C/C++ Programs 1152 12.42 Register Preservation 1155 12.43 Function Results 1155 12.44 Calling Conventions 1155 12.45 Pass by Value and Reference in C/C++ 1158 12.46 Scalar Data Type Correspondence Between Delphi and HLA 1158 12.47 Passing String Data Between C/C++ and HLA Code 1160 12.48 Passing Record/Structure Data Between HLA and C/C++ 1160 12.49 Passing Array Data Between HLA and C/C++ 1161 12.5 Putting It All Together 1162 13.1

Questions 1163 13.2 Programming Problems 1171 13.3 Laboratory Exercises 1180 13.31 Dynamically Nested TRYENDTRY Statements 1181 13.32 The TRYENDTRY Unprotected Section 1182 13.33 Performance of SWITCH Statement 1183 13.34 Complete Versus Short Circuit Boolean Evaluation 1187 13.35 Conversion of High Level Language Statements to Pure Assembly 1190 13.36 Activation Record Exercises 1190 13.361 Automatic Activation Record Generation and Access 1190 13.362 The vars and parms Constants 1192 13.363 Manually Constructing an Activation Record 1194 13.37 Reference Parameter Exercise 1196 13.38 Procedural Parameter Exercise 1199 13.39 Iterator Exercises 1202 13.310 Performance of Multiprecision Multiplication and Division Operations 1205 13.311 Performance of the Extended Precision NEG Operation 1205 13.312 Testing the Extended Precision Input Routines 1206 13.313 Illegal Decimal Operations 1206 13.314 MOVS Performance Exercise #1 1206 13.315 MOVS Performance Exercise #2

1208 13.316 Memory Performance Exercise 1210 13.317 The Performance of Length-Prefixed vs Zero-Terminated Strings 1211 13.318 Introduction to Compile-Time Programs 1217 13.319 Conditional Compilation and Debug Code 1218 13.320 The Assert Macro 1220 13.321 Demonstration of Compile-Time Loops (#while) 1222 13.322 Writing a Trace Macro 1224 13.323 Overloading 1226 13.324 Multi-part Macros and RatASM (Rational Assembly) 1229 13.325 Virtual Methods vs Static Procedures in a Class 1232 13.326 Using the initialize and finalize Strings in a Program 1235 13.327 Using RTTI in a Program 1237 1.1 Chapter Overview 1247 1.2 First Class Objects 1247 1.3 Thunks 1249 1.4 Initializing Thunks 1250 1.5 Manipulating Thunks 1251 1.51 Assigning Thunks 1251 1.52 Comparing Thunks 1252 1.53 Passing Thunks as Parameters 1252 1.54 Returning Thunks as Function Results 1254 1.6 Activation Record Lifetimes and

Thunks 1256 1.7 Comparing Thunks and Objects 1257 1.8 An Example of a Thunk Using the Fibonacci Function 1257 1.9 Thunks and Artificial Intelligence Code 1262 1.10 Thunks as Triggers 1263 1.11 Jumping Out of a Thunk 1267 1.12 Handling Exceptions with Thunks 1269 1.13 Using Thunks in an Appropriate Manner 1270 1.14 Putting It All Together 1270 2.1 Chapter Overview 1271 2.2 Iterators 1271 2.21 Implementing Iterators Using In-Line Expansion 1273 2.22 Implementing Iterators with Resume Frames 1274 2.3 Other Possible Iterator Implementations 1279 2.4 Breaking Out of a FOREACH Loop 1282 2.5 An Iterator Implementation of the Fibonacci Number Generator 1282 2.6 Iterators and Recursion 1289 2.7 Calling Other Procedures Within an Iterator 1292 2.8 Iterators Within Classes 1292 2.9 Putting It Altogether 1292 3.1 Chapter Overview 1293 3.2 Coroutines 1293 3.3 Parameters and Register Values in Coroutine Calls 1298 3.4 Recursion, Reentrancy, and Variables 1299 3.5 Generators

1301 3.6 Exceptions and Coroutines 1304 3.7 Putting It All Together 1304 4.1 Chapter Overview 1305 4.2 Parameters 1305 4.3 Where You Can Pass Parameters 1305 4.31 Passing Parameters in (Integer) Registers 1306 4.32 Passing Parameters in FPU and MMX Registers 1309 4.33 Passing Parameters in Global Variables 1310 4.34 Passing Parameters on the Stack 1310 4.35 Passing Parameters in the Code Stream 1315 4.36 Passing Parameters via a Parameter Block 1317 4.4 How You Can Pass Parameters 1318 4.41 Pass by Value-Result 1318 4.42 Pass by Result 1323 4.43 Pass by Name 1324 4.44 Pass by Lazy-Evaluation 1326 4.5 Passing Parameters as Parameters to Another Procedure 1327 4.51 Passing Reference Parameters to Other Procedures 1327 4.52 Passing Value-Result and Result Parameters as Parameters 1328 4.53 Passing Name Parameters to Other Procedures 1329 4.54 Passing Lazy Evaluation

Parameters as Parameters 1330 4.55 Parameter Passing Summary 1330 4.6 Variable Parameter Lists 1331 4.7 Function Results 1333 4.71 Returning Function Results in a Register 1333 4.72 Returning Function Results on the Stack 1334 4.73 Returning Function Results in Memory Locations 1334 4.74 Returning Large Function Results 1335 4.8 Putting It All Together 1335 5.1 Chapter Overview 1337 5.2 Lexical Nesting, Static Links, and Displays 1337 5.21 Scope 1337 5.22 Unit Activation, Address Binding, and Variable Lifetime . 1338 5.23 Static Links 1339 5.24 Accessing Non-Local Variables Using Static Links 1343 5.25 Nesting Procedures in HLA 1345 5.26 The Display 1349 5.27 The 80x86 ENTER and LEAVE Instructions 1352 5.3 Passing Variables at Different Lex Levels as Parameters 1355 5.31 Passing Parameters by Value 1355 5.32 Passing Parameters by Reference, Result, and Value-Result . 1356 5.33 Passing Parameters by Name and Lazy-Evaluation in a Block Structured Language 1357 5.4

Passing Procedures as Parameters 1357 5.5 Faking Intermediate Variable Access 1357 5.6 Putting It All Together 1358 6.1 Questions 1359 6.2 Programming Problems 1362 6.3 Laboratory Exercises 1363 1.1 Introduction 1371 1.11 Intended Audience 1371 1.12 Readability Metrics 1371 1.13 How to Achieve Readability 1372 1.14 How This Document is Organized 1373 1.15 Guidelines, Rules, Enforced Rules, and Exceptions 1373 1.16 Source Language Concerns 1374 1.2 Program Organization 1374 1.21 Library Functions 1374 1.22 Common Object Modules 1375 1.23 Local Modules 1375 1.24 Program Make Files 1376 1.3 Module Organization 1377 1.31 Module Attributes 1377 1.311 Module Cohesion 1377 1.312 Module Coupling 1378 1.313 Physical Organization of Modules 1378 1.314 Module Interface 1379 1.4 Program Unit Organization 1380 1.41 Routine Cohesion 1380 1.42 Routine Coupling 1381 1.43 Routine Size 1381 1.5

Statement Organization 1382 1.51 Writing “Pure” Assembly Code 1382 1.52 Using HLA’s High Level Control Statements 1384 1.6 Comments 1389 1.61 What is a Bad Comment? 1390 1.62 What is a Good Comment? 1391 1.63 Endline vs Standalone Comments 1392 1.64 Unfinished Code 1393 1.65 Cross References in Code to Other Documents 1394 1.7 Names, Instructions, Operators, and Operands 1395 1.71 Names 1395 1.711 Naming Conventions 1397 1.712 Alphabetic Case Considerations 1397 1.713 Abbreviations 1398 1.714 The Position of Components Within an Identifier 1399 1.715 Names to Avoid 1400 1.716 Special Identifiers 1401 1.72 Instructions, Directives, and Pseudo-Opcodes 1402 1.721 Choosing the Best Instruction Sequence 1402 1.722 Control Structures 1403 1.723 Instruction Synonyms 1405 1.8 Data Types 1407 1.81 Declaring Structures in Assembly Language 1407 H.1 Conversion

Functions 1447 H.2 Numeric Functions 1449 H.3 Date/Time Functions 1450 H.4 Classification Functions 1451 H.5 String and Character Set Functions 1452 H.6 Pattern Matching Functions 1455 H.61 String/Cset Pattern Matching Functions 1456 H.62 String/Character Pattern Matching Functions 1460 H.63 String/Case Insensitive Character Pattern Matching Functions 1464 H.64 String/String Pattern Matching Functions 1465 H.65 String/Misc Pattern Matching Functions 1466 H.7 HLA Information and Symbol Table Functions 1469 H.8 Compile-Time Variables 1474 H.9 Miscellaneous Compile-Time Functions 1475 I.1 What’s Included in the HLA Distribution Package 1479 I.2 Using the HLA Compiler 1480 I.3 Compiling Your First Program 1480 I.4 Win 2000 Installation Notes Taken from comp.lang.asm.x86 1481 I.41 To Install HLA 1481 I.42 Setting Up UEDIT32 1482 I.43 Wordfile.txt Contents (for UEDIT) 1484 1.1 The @TRACE Pseudo-Variable 1501 1.2 The Assert Macro 1504 1.3 RATASM 1504 1.4 The HLA Standard

Library DEBUG Module 1504 L.1 The HLA Standard Library 1507 L.2 Compiling to MASM Code -- The Final Word 1508 L.3 The HLA if..then..endif Statement, Part I 1513 L.4 Boolean Expressions in HLA Control Structures 1514 L.5 The JT/JF Pseudo-Instructions 1520 L.6 The HLA if..then..elseif..else..endif Statement, Part II 1520 L.7 The While Statement 1524 L.8 repeat..until 1526 L.9 for..endfor 1526 L.10 forever..endfor 1526 L.11 break, breakif 1526 L.12 continue, continueif 1526 L.13 begin..end, exit, exitif 1526 L.14 foreach..endfor 1526 L.15 try..unprotect..exception..anyexception..endtry, raise 1526

Volume One: Data Representation

Chapter One: Foreword
An introduction to this text and the purpose behind this text.

Chapter Two: Hello, World of Assembly Language
A brief introduction to assembly language programming using the HLA language.

Chapter Three: Data

Representation
A discussion of numeric representation on the computer.

Chapter Four: More Data Representation
Advanced numeric and non-numeric computer data representation.

Chapter Five: Questions, Projects, and Laboratory Exercises
Test what you’ve learned in the previous chapters!

These five chapters are appropriate for all courses teaching machine organization and assembly language programming.

Chapter One: Foreword

Nearly every text has a throw-away chapter as Chapter One. Here’s my version. Seriously, though, some important copyright, instructional, and support information appears in this chapter. So you’ll probably want to read this stuff. Instructors will definitely want to review this material.

Foreword to the HLA Version of “The Art of Assembly”

In 1987 I began work on a text I entitled “How to Program the IBM PC, Using 8088 Assembly

Language.” First the 8088 faded into history, and shortly thereafter the phrase “IBM PC” and even “IBM PC Compatible” became far less dominant in the industry, so I retitled the text “The Art of Assembly Language Programming.” I used this text in my courses at Cal Poly Pomona and UC Riverside for many years, getting good reviews on the text (not to mention lots of suggestions and corrections). Sometime around 1994-1995, I converted the text to HTML and posted an electronic version on the Internet. The rest, as they say, is history. A week doesn’t go by that I don’t get several emails praising me for releasing such a fine text on the Internet. Indeed, I only hear three really big complaints about the text: (1) It’s a University textbook and some people don’t like to read textbooks, (2) It’s 16-bit DOS-based, and (3) there isn’t a print version of the text. Well, I make no apologies for complaint #1. The whole reason I wrote the text was to support my courses at Cal

Poly and UC Riverside. Complaint #2 is quite valid; that’s why I wrote this version of the text. As for complaint #3, it was really never cost effective to create a print version; publishers simply cannot justify printing a text 1,500 pages long with a limited market. Furthermore, having a print version would prevent me from updating the text at will for my courses.

The astute reader will note that I haven’t updated the electronic version of “The Art of Assembly Language Programming” (or “AoA”) since about 1996. If the whole reason for keeping the book in electronic form has been to make updating the text easy, why haven’t there been any updates? Well, the story is very similar to Knuth’s “The Art of Computer Programming” series: I was sidetracked by other projects [1]. The static nature of AoA over the past several years was never really intended. During the 1995-1996 time frame, I decided it was time to make a major revision to AoA. The first version of AoA was MS-DOS

based and by 1995 it was clear that MS-DOS was finally becoming obsolete; almost everyone except a few die-hards had switched over to Windows. So I knew that AoA needed an update for Windows, if nothing else. I also took some time to evaluate my curriculum to see if I couldn’t improve the pedagogical (teaching) material to make it possible for my students to learn even more about 80x86 assembly language in a relatively short 10-week quarter. One thing I’ve learned after teaching an assembly language course for over a decade is that support software makes all the difference in the world to students writing their first assembly language programs. When I first began teaching assembly language, my students had to write all their own I/O routines (including numeric to string conversions for numeric I/O). While one could argue that there is some value to having students write this code for themselves, I quickly discovered that they spent a large percentage of their project time over the

quarter writing I/O routines. Each moment they spent writing these relatively low-level routines was one less moment available to them for learning more advanced assembly language programming techniques. While, I repeat, there is some value to learning how to write this type of code, it’s not all that related to assembly language programming (after all, the same type of problem has to be solved for any language that allows numeric I/O). I wanted to free the students from this drudgery so they could learn more about assembly language programming. The result of this observation was “The UCR Standard Library for 80x86 Assembly Language Programmers.” This is a library containing several hundred I/O and utility functions that students could use in their assembly language programs. More than nearly anything else, the UCR Standard Library improved the progress students made in my courses.

[1] Actually, another problem is the effort needed to maintain the HTML version, since it was a manual conversion from Adobe FrameMaker. But that’s another story.

It should come as no surprise, then, that one of my first projects when rewriting AoA was to create a new, more powerful version of the UCR Standard Library. This effort (the UCR Stdlib v2.0) ultimately failed (although you can still download the code written for v2.0 from http://webster.cs.ucr.edu). The problem was that I was trying to get MASM to do a little bit more than it was capable of, and so the project was ultimately doomed. To condense a really long story, I decided that I needed a new assembler, one that was powerful enough to let me write the new Standard Library the way I felt it should be written. However, this new assembler should also make it much easier to learn assembly language; that is, it should relieve the students of some of the drudgery of assembly language programming just as the UCR Standard Library had. After three years of part-time effort, the end result was the

“High Level Assembler,” or HLA. HLA is a radical step forward in teaching assembly language. It combines the syntax of a high level language with the low-level programming capabilities of assembly language. Together with the HLA Standard Library, it makes learning and programming assembly language almost as easy as learning and programming a High Level Language like Pascal or C++. Although HLA isn’t the first attempt to create a hybrid high level/low level language, nor is it even the first attempt to create an assembly language with high level language syntax, it’s certainly the first complete system (with library and operating system support) that is suitable for teaching assembly language programming. Recent experiences in my own assembly language courses show that HLA is a major improvement over MASM and other traditional assemblers when teaching machine organization and assembly language programming. The introduction of HLA is bound to raise lots of questions about its

suitability to the task of teaching assembly language programming (as well it should). Today, the primary purpose of teaching assembly language programming at the University level isn’t to produce a legion of assembly language programmers; it’s to teach machine organization and introduce students to machine architecture. Few instructors realistically expect more than about 5% of their students to wind up working in assembly language as their primary programming language [2]. Doesn’t turning assembly language into a high level language defeat the whole purpose of the course? Well, if HLA let you write C/C++ or Pascal programs and attempted to call these programs “assembly language” then the answer would be “Yes, this defeats the purpose of the course.” However, despite the name and the high level (and very high level) features present in HLA, HLA is still assembly language. An HLA programmer still uses 80x86 machine instructions to accomplish most of the work. And those high

level language statements that HLA provides are purely optional; the “purist” can use nothing but 80x86 assembly language, ignoring the high level statements that HLA provides. Those who argue that HLA is not true assembly language should note that Microsoft’s MASM and Inprise’s TASM both provide many of the high level control structures found in HLA [3]. Perhaps the largest deviation from traditional assemblers that HLA makes is in the declaration of variables and data in a program. HLA uses a very Pascal-like syntax for variable, constant, type, and procedure declarations. However, this does not diminish the fact that HLA is an assembly language. After all, at the machine language (vs. assembly language) level, there is no such thing as a data declaration. Therefore, any syntax for data declaration is an abstraction of data representation in memory. I personally chose to use a syntax that would prove more familiar to my students than the traditional data declarations used by

assemblers. Indeed, perhaps the principal driving force in HLA’s design has been to leverage the student’s existing knowledge when teaching them assembly language. Keep in mind, when a student first learns assembly language programming, there is so much more for them to learn than a handful of 80x86 machine instructions and the machine language programming paradigm. They’ve got to learn assembler directives, how to declare variables, how to write and call procedures, how to comment their code, what constitutes good programming style in an assembly language program, etc.

[2] My experience suggests that only about 10-20% of my students will ever write any assembly language again once they graduate; less than 5% ever become regular assembly language users.

[3] Indeed, in some respects the MASM and TASM HLL control structures are actually higher level than HLA’s; I specifically restricted the statements in HLA because I did not want students writing “C/C++ programs with MOV instructions.”

Unfortunately, with most assemblers, these concepts are completely different in assembly language than they are in a language like Pascal or C/C++. For example, the indentation techniques students master in order to write readable code in Pascal just don’t apply to (traditional) assembly language programs. That’s where HLA deviates from traditional assemblers. By using a high level syntax, HLA lets students leverage their high level language knowledge to write good readable programs. HLA will not let them avoid learning machine instructions, but it doesn’t force them to learn a whole new set of programming style guidelines, new ways to comment their code, new ways to create identifiers, etc. HLA lets them use the knowledge they already possess in those areas that really have little to do with assembly language programming so they can concentrate on learning the important issues in assembly

language. So let there be no question about it: HLA is an assembly language. It is not a high level language masquerading as an assembler [4]. However, it is a system that makes learning and using assembly language easier than ever before. Some long-time assembly language programmers, and even many instructors, would argue that making a subject easier to learn diminishes the educational content. Students don’t get as much out of a course if they don’t have to work very hard at it. Certainly, students who don’t apply themselves as well aren’t going to learn as much from a course. I would certainly agree that if HLA’s only purpose was to make it easier to learn a fixed amount of material in a course, then HLA would have the negative side-effect of reducing what the students learn in their course. However, the real purpose of HLA is to make the educational process more efficient; not so the students spend less time learning a fixed amount of material (although HLA could

certainly achieve this), but to allow the students to learn the same amount of material in less time so they can use the additional time available to them to advance their study of assembly language. Remember what I said earlier about the UCR Standard Library: its introduction into my course allowed me to teach even more advanced topics in my course. The same is true, even more so, for HLA. Keep in mind, I’ve got ten weeks in a quarter. If using HLA lets me teach the same material in seven weeks that took ten weeks with MASM, I’m not going to dismiss the course after seven weeks. Instead, I’ll use this additional time to cover more advanced topics in assembly language programming. That’s the real benefit to using pedagogical tools like HLA. Of course, once I’ve addressed the concerns of assembly language instructors and long-time assembly language programmers, the need arises to address questions a student might have about HLA. Without question, the number one concern my

students have had is “If I spend all this time learning HLA, will I be able to use this knowledge once I get out of school?” A more blunt way of putting this is “Am I wasting my time learning HLA?” Let me address these questions three ways. First, as pointed out above, most people (instructors and experienced programmers) view learning assembly language as an educational process. Most students will probably never program full-time in assembly language; indeed, few programmers write more than a tiny fraction (less than 1%) of their code in assembly language. One of the main reasons most Universities require their students to take an assembly language course is so they will be familiar with the low-level operation of their machine and so they can appreciate what the compiler is doing for them (which helps them write better HLL code once they realize how the compiler processes HLL statements). HLA is an assembly language and learning HLA will certainly teach you the concepts of

machine organization, the real purpose behind most assembly language courses. The second point to ponder is that learning assembly language consists of two main activities: learning the assembler’s syntax and learning the assembly language programming paradigm (that is, learning to think in assembly language). Of these two, the second activity is, by far, the more difficult. HLA, since it uses a high level language-like syntax, simplifies learning the assembly language syntax. HLA also simplifies the initial process of learning to program in assembly language by providing a crutch, the HLA high level statements, that allows students to use high level language semantics when writing their first programs. However, HLA does allow students to write “pure” assembly language programs, so a good instructor will ensure that they master the full assembly language programming paradigm before they complete the course. Once a student masters the semantics (i.e., the programming paradigm) of

assembly language, learning a new syntax is relatively easy. Therefore, a typical student should be able to pick up MASM in about a week after mastering HLA [5].

[4] The C-- language is a good example of a low-level non-assembly language, if you need a comparison.

As for the third and final point: to those that would argue that this is still extra effort that isn’t worthwhile, I would simply point out that none of the existing assemblers have more than a cursory level of compatibility. Yes, TASM can assemble most MASM programs, but the reverse is not true. And it’s certainly not the case that NASM, A86, GAS, MASM, and TASM let you write interchangeable code. If you master the syntax of one of these assemblers and someone expects you to write code in a different assembler, you’re still faced with the prospect of having to learn the syntax of the new assembler. And that’s going to take you about

a week (assuming the presence of well-written documentation). In this respect, HLA is no different than any of the other assemblers. Having addressed these concerns you might have, it’s now time to move on and start teaching assembly language programming using HLA.

Intended Audience

No single textbook can be all things to all people. This text is no exception. I’ve geared this text and the accompanying software to University level students who’ve never previously learned assembly language programming. This is not to say that others cannot benefit from this work; it simply means that as I’ve had to make choices about the presentation, I’ve made choices that should prove most comfortable for this audience I’ve chosen. A secondary audience who could benefit from this presentation is any motivated person who really wants to learn assembly language. Although I assume a certain level of mathematical maturity from the reader (i.e., high school algebra), most of the “tough

math” in this textbook is incidental to learning assembly language programming and you can easily skip over it without fear that you’ll miss too much. High school students and those who haven’t seen a school in 40 years have effectively used this text (and its DOS counterpart) to learn assembly language programming. The organization of this text reflects the diverse audience for which it is intended. For example, in a standard textbook each chapter typically has its own set of questions, programming exercises, and laboratory exercises. Since the primary audience for this text is University students, such pedagogical material does appear within this text. However, recognizing that not everyone who reads this text wants to bother with this material (e.g., downloading it), this text moves such pedagogical material to the end of each volume in the text and places this material in a separate chapter. This is somewhat of an unusual organization, but I feel that University instructors can

easily adapt to this organization and it saves burdening those who aren’t interested in this material. One audience to whom this book is specifically not directed is those persons who are already comfortable programming in 80x86 assembly language. Undoubtedly, there is a lot of material such programmers will find of use in this textbook. However, my experience suggests that those who’ve already learned x86 assembly language with an assembler like MASM, TASM, or NASM rebel at the thought of having to relearn basic assembly language syntax (as they would have to in order to learn HLA). If you fall into this category, I humbly apologize for not writing a text more to your liking. However, my goal has always been to teach those who don’t already know assembly language, not extend the education of those who do. If you happen to fall into this category and you don’t particularly like this text’s presentation, there is some good news: there are dozens of texts on assembly language

programming that use MASM and TASM out there. So you don’t really need this one.

[5] This is very similar to mastering C after learning C++.

Teaching From This Text

The first thing any instructor will notice when reviewing this text is that it’s far too large for any reasonable course. That’s because assembly language courses generally come in two flavors: a machine organization course (more hardware oriented) and an assembly language programming course (more software oriented). No text that is “just the right size” is suitable for both types of classes. Combining the information for both courses, plus advanced information students may need after they finish the course, produces a large text, like this one. If you’re an instructor with a limited schedule for teaching this subject, you’ll have to carefully select the material you choose to present over the time span of your course. To help,

I’ve included some brief notes at the beginning of each Volume in this text that suggest whether a chapter in that Volume is appropriate for a machine organization course, an assembly language programming course, or an advanced assembly programming course. These brief course notes can help you choose which chapters you want to cover in your course. If you would like to offer hard copies of this text in the bookstore for your students, I will attempt to arrange with some “Custom Textbook Publishing” houses to make this material available on an “as-requested” basis. As I work out arrangements with such outfits, I’ll post ordering information on Webster (http://webster.cs.ucr.edu). If your school has a printing and reprographics department, or you have a local business that handles custom publishing, you can certainly request copyright clearance to print the text locally. If you’re not taking a formal course, just keep in mind that you don’t have to read this text straight

through, chapter by chapter. If you want to learn assembly language programming and some of the machine organization chapters seem a little too hardware oriented for your tastes, feel free to skip those chapters and come back to them later on, when you understand the need to learn this information.

Copyright Notice

The full contents of this text are copyrighted material. Here are the rights I hereby grant concerning this material. You have the right to

• Read this text on-line from the http://webster.cs.ucr.edu web site or any other approved web site.
• Download an electronic version of this text for your own personal use and view this text on your own personal computer.
• Make a single printed copy for your own personal use.

I usually grant instructors permission to use this text in conjunction with their courses at recognized academic institutions. There are two types of reproduction I allow in this instance: electronic and printed. I grant electronic reproduction rights

for one school term, after which the institution must remove the electronic copy of the text and obtain new permission to repost the electronic form (I require a new copy for each term so that corrections, changes, and additions propagate across the net). If your institution has reproduction facilities, I will grant hard copy reproduction rights for one academic year (for the same reasons as above). You may obtain copyright clearance by emailing me at rhyde@cs.ucr.edu. I will respond with clearance via email. My returned email plus this page should provide sufficient acknowledgement of copyright clearance. If, for some reason, your reproduction department needs to have me physically sign a copyright clearance, I will have to charge $75.00 US to cover my time and effort needed to deal with this. To obtain such clearance, please email me at the address above. Presumably, your printing and reproduction department can handle producing a master copy from PDF files. If not, I can print a master

copy on a laser printer (800x400 dpi); please email me for the current cost of this service.

All other rights to this text are expressly reserved by the author. In particular, it is a copyright violation to

• Post this text (or some portion thereof) on some web site without prior approval.
• Reproduce this text in printed or electronic form for non-personal (e.g., commercial) use.

2001, By Randall Hyde. Beta Draft - Do not distribute.

The software accompanying this text is all public domain material unless an explicit copyright notice appears in the software. Feel free to use the accompanying software in any way you see fit.

How to Get a Hard Copy of This Text

This text is distributed in electronic form only. It is not available in hard copy form nor do I personally intend to have it published. If you want a hard copy of this text, the copyright allows you to print one for yourself. The PDF distribution format makes this possible (though the

length of the text will make it somewhat expensive).

If you’re wondering why I don’t get this text published, there’s a very simple reason: it’s too long. Publishing houses generally don’t want to get involved with texts for specialized subjects as it is; the cost of producing this text is prohibitive given its limited market. Rather than cut it down to the 500 or so 6” x 9” pages that most publishers would accept, I decided to stick with the full text and release it in electronic form on the Internet. The upside is that you can get a free copy of this text; the downside is that you can’t readily get a hard copy.

Note that the copyright notice forbids you from copying this text for anything other than personal use (without permission, of course). If you run a “Print to Order/Custom Textbook” publishing house and would like to make copies for people, feel free to contact me and maybe we can work out a deal for those who just have to have a hard copy of

this text.

Obtaining Program Source Listings and Other Materials in This Text

All of the software appearing in this text is available from the Webster web site. The URL is http://webster.cs.ucr.edu. The data might also be available via ftp from the following Internet address: ftp.cs.ucr.edu. Log onto ftp.cs.ucr.edu using the anonymous account name and any password. Switch to the “/pub/pc/ibmpcdir” subdirectory (this is UNIX so make sure you use lowercase letters). You will find the appropriate files by searching through this directory.

The exact filename(s) of this material may change with time, and different services use different names for these files. Check on Webster for any important changes in addresses. If, for some reason, Webster disappears in the future, you should use a web-based search engine like “AltaVista” and search for “Art of Assembly” to locate the current home site of this material.

Where to Get Help

If you’re reading this text and you’ve got

questions about how to do something, please post a message to one of the following Internet newsgroups:

comp.lang.asm.x86
alt.lang.asm

Hundreds of knowledgeable individuals frequent these newsgroups and, as long as you’re not simply asking them to do your homework assignment for you, they’ll probably be more than happy to help you with any problems that you have with assembly language programming.

I certainly welcome corrections and bug reports concerning this text at my email address. However, I regret that I do not have the time to answer general assembly language programming questions via email. I do provide support in public forums (e.g., the newsgroups above and on Webster at http://webster.cs.ucr.edu), so please use those avenues rather than emailing questions directly to me. Due to the volume of email I receive daily, I regret that I cannot reply to all emails that I receive; so if you’re looking for a

response to a question, the newsgroup is your best bet (not to mention, others might benefit from the answer as well).

Other Materials You Will Need

In addition to this text and the software I provide, you will need a machine running a 32-bit version of Windows (Windows 9x, NT, 2000, ME, etc.), a copy of Microsoft’s MASM and a 32-bit linker, some sort of text editor, and other rudimentary general-purpose software tools you normally use. MASM and MS-Link are freely available on the internet. Alas, the procedure you must follow to download these files from Microsoft seems to change on a monthly basis. However, a quick post to comp.lang.asm.x86 should turn up the current site from which you may obtain this software. Almost all the software you need to use this text is part of Windows (e.g., a simple text editor like Notepad.exe) or is freely available on the net (MASM, LINK, and HLA). You shouldn’t have to purchase anything.

Chapter Two Hello, World of Assembly Language

2.0 Chapter Overview

This chapter is a “quick-start” chapter that lets you start writing basic assembly language programs right away. It presents the basic syntax of an HLA (High Level Assembly) program, introduces you to the Intel CPU architecture, provides a handful of data declarations and machine instructions, describes some utility routines you can call in the HLA Standard Library, and then shows you how to write some simple assembly language programs. By the conclusion of this chapter, you should understand the basic syntax of an HLA program and be prepared to start learning new language features in subsequent chapters.

Note: this chapter assumes that you have successfully installed HLA on your system. Please see Appendix I for details concerning the installation of HLA (alternately,

you can read the HLA documentation or check out the laboratory exercises associated with this volume).

2.1 The Anatomy of an HLA Program

An HLA program typically takes the following form:

    program pgmID ;
        Declarations
    begin pgmID ;
        Statements
    end pgmID ;

The annotations in the figure read as follows:

• The three pgmID identifiers specify the name of the program. They must all be the same identifier.
• The Declarations section is where you declare constants, types, variables, procedures, and other objects in an HLA program.
• The Statements section is where you place the executable statements for your main program.
• PROGRAM, BEGIN, and END are HLA reserved words that delineate the program. Note the placement of the semicolons in this program.

Figure 2.1 Basic HLA Program Layout

The pgmID in the template above is a user-defined program identifier. You must pick an appropriate, descriptive name for your program. In particular, pgmID would be a horrible choice for any real program. If you are writing programs as part of a course assignment,

your instructor will probably give you the name to use for your main program. If you are writing your own HLA program, you will have to choose this name.

Identifiers in HLA are very similar to identifiers in most high level languages. HLA identifiers may begin with an underscore or an alphabetic character, and may be followed by zero or more alphanumeric or underscore characters. HLA’s identifiers are case neutral. This means that the identifiers are case sensitive insofar as you must always spell an identifier exactly the same way (even with respect to upper and lower case) in your program. However, unlike other case sensitive languages, like C/C++, you may not declare two identifiers in the program whose names differ only by the case of alphabetic characters appearing in the identifiers. Case neutrality enforces the good programming style of always spelling your names exactly the same way (with respect to case) and never declaring two identifiers whose only difference is the case of

certain alphabetic characters.

A traditional first program people write, popularized by K&R’s “The C Programming Language”, is the “Hello World” program. This program makes an excellent concrete example for someone who is learning a new language. Here’s what the “Hello World” program looks like in HLA:

    program helloWorld;
    #include( "stdlib.hhf" );

    begin helloWorld;

        stdout.put( "Hello, World of Assembly Language", nl );

    end helloWorld;

Program 2.1 The Hello World Program

The #include statement in this program tells the HLA compiler to include a set of declarations from the stdlib.hhf (standard library, HLA Header File) file. Among other things, this file contains the declaration of the stdout.put code that this program uses.

The stdout.put statement is the typical “print” statement for the HLA language. You use it to write data to the standard output device (generally

the console). To anyone familiar with I/O statements in a high level language, it should be obvious that this statement prints the phrase “Hello, World of Assembly Language”. The nl appearing at the end of this statement is a constant, also defined in “stdlib.hhf”, that corresponds to the newline sequence.

Note that semicolons follow the PROGRAM, BEGIN, stdout.put, and END statements¹. Technically speaking, a semicolon is generally allowable after the #INCLUDE statement. However, it is possible to create include files that generate an error if a semicolon follows the #INCLUDE statement, so you may want to get in the habit of not putting a semicolon here (note, however, that the HLA standard library include files always allow a semicolon after the corresponding #INCLUDE statement).

The #INCLUDE is your first introduction to HLA declarations. The #INCLUDE itself isn’t actually a declaration, but it does tell the HLA compiler to substitute the file “stdlib.hhf” in place of the #INCLUDE

directive, thus inserting several declarations at this point in your program. Most HLA programs you will write will need to include at least some of the HLA Standard Library header files (“stdlib.hhf” actually includes all the standard library definitions into your program; for more efficient compiles, you might want to be more selective about which files you include. You will see how to do this in a later chapter).

Compiling this program produces a console application. Under Win32², running this program in a command window prints the specified string and then control returns to the Windows command line interpreter.

2.2 Some Basic HLA Data Declarations

HLA provides a wide variety of constant, type, and data declaration statements. Later chapters will cover the declaration section in more detail, but it’s important to know how to declare a few simple variables in an HLA program.

1. Technically, from a language design point of view, these are not all statements. However, this

chapter will not make that distinction.

2. This text will use the phrase Win32 to denote any 32-bit version of Windows including Windows NT, Windows 95, Windows 98, Windows 2000, and later versions of Windows that run on processors supporting the Intel 32-bit 80x86 instruction set.

HLA predefines three different signed integer types: int8, int16, and int32, corresponding to eight-bit (one byte) signed integers, 16-bit (two byte) signed integers, and 32-bit (four byte) signed integers respectively³. Typical variable declarations occur in the HLA static variable section. A typical set of variable declarations takes the following form:

    static
        i8:  int8;
        i16: int16;
        i32: int32;

• "static" is the keyword that begins the variable declaration section.
• i8, i16, and i32 are the names of the variables to declare here.
• int8, int16, and int32 are the names of the data types for each declaration.

Figure 2.2 Static Variable Declarations

3. A discussion of bits and bytes will appear in the next chapter if you are unfamiliar with these terms.

Those who are familiar with the Pascal language should be comfortable with this declaration syntax. This example demonstrates how to declare three separate integers, i8, i16, and i32. Of course, in a real program you should use variable names that are a little more descriptive. While names like “i8” and “i32” describe the type of the object, they do not describe its purpose. Variable names should describe the purpose of the object.

In the STATIC declaration section, you can also give a variable an initial value that the operating system will assign to the variable when it loads the program into memory. The following figure demonstrates the syntax for this:

    static
        i8:  int8  := 8;
        i16: int16 := 1600;
        i32: int32 := -320000;

• The constant assignment operator, ":=", tells HLA that you wish to initialize the specified variable with an initial value.
• The operand after the constant assignment operator must be a constant whose type is compatible with the variable you are initializing.

Figure 2.3 Static Variable Initialization

It is important to realize that the expression following the assignment operator (“:=”) must be a constant expression. You cannot assign the values of other variables within a STATIC variable declaration.

Those familiar with other high level languages (especially Pascal) should note that you may only declare one variable per statement. That is, HLA does not allow a comma delimited list of variable names followed by a colon and a type identifier. Each variable declaration consists of a single identifier, a colon, a type ID, and a semicolon.

Here is a simple HLA program that demonstrates the use of variables within an HLA program:

    program DemoVars;
    #include( "stdlib.hhf" );

    static
        InitDemo:       int32 := 5;
        NotInitialized: int32;

    begin DemoVars;

        // Display the value of the pre-initialized variable:

        stdout.put( "InitDemo's value is ", InitDemo, nl );

        // Input an integer value from the user and display that value:

        stdout.put( "Enter an integer value: " );
        stdin.get( NotInitialized );
        stdout.put( "You entered: ", NotInitialized, nl );

    end DemoVars;

Program 2.2 Variable Declaration and Use

In addition to STATIC variable declarations, this example introduces three new concepts. First, the stdout.put statement allows multiple parameters. If you specify an integer value, stdout.put will convert that value to the string representation of that integer’s value on output. The second new feature this sample program introduces is the stdin.get statement. This statement reads a value from the standard input device (usually the keyboard), converts the value to an integer, and stores the integer value into the NotInitialized variable. Finally, this